At AnalogFolk we decided to improve the process of booking meeting rooms using a Slack bot and Google Calendar.

Check out the article: https://analogfolk.com/blog/smarter-meeting-rooms

“It’s pointless having a website if no-one can find it!”

Before we continue, I’d just like to state that there is no silver bullet for SEO; these recommendations come from personal experiments. The algorithms that rank pages are not publicly available, and even though I might mention Google as a synonym for search engine, you should always consider who you’re targeting. E.g. Yahoo has a relevant share in the US compared to the UK, and Baidu is the “Google” of China.

“SEO is a way of creating more visibility on Search Engines such as Google, Bing! and Baidu, by ranking websites higher in search results, which in turn leads to more click-throughs and conversions.”

To improve your Search Engine Results Page (SERP) ranking, there are on-the-page factors like Code / Markup and Content, and off-the-page factors, such as Links / Social. By ranking higher in the SERP, you increase your visibility and reputation, which in turn leads to sales / engagement. See below a great infographic about SEO.

Although the Technical Improvements have the smallest impact of the improvements I’ll be mentioning in terms of reputation, they are the first step to get your website pages visible / indexed.

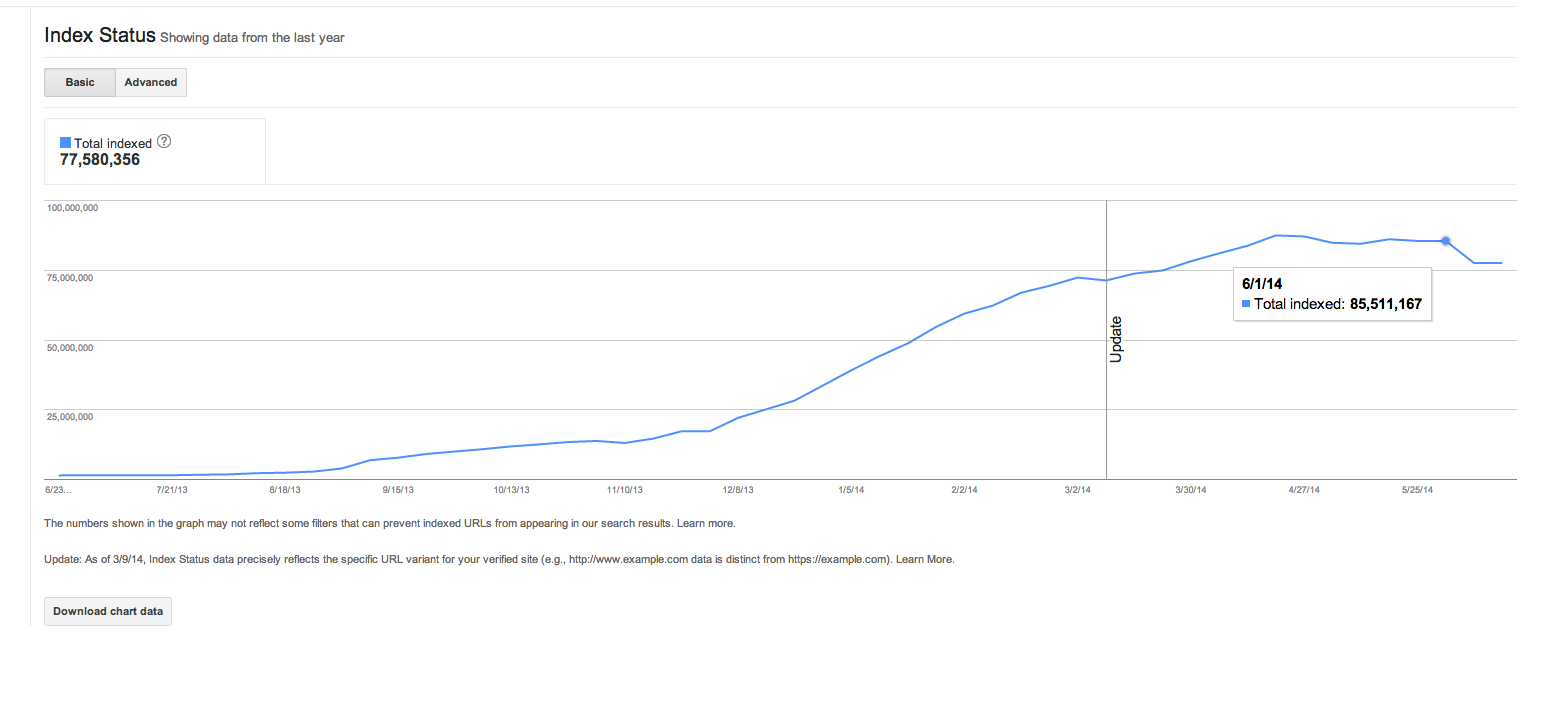

Shopcade is a social / e-commerce platform (something like Facebook meets Amazon). At the time they had a catalog of 100M products, but only a fraction of those products were indexed / discoverable, so the mission was to get most of the catalog showing up on the SERPs.

After implementing most of the technical improvements (code / markup), the site went from 34K indexed pages to 85M on Google in a year! I believe I have your attention now…

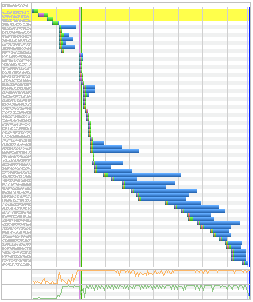

We got this graph from Google Webmaster Tools (GWT) and we could monitor which improvements had better results (we also added comments on Google Analytics every time we made an improvement, so we could track better).

A Web crawler is an Internet bot which systematically browses the World Wide Web, typically for the purpose of Web indexing. A Web crawler may also be called a Web spider, a bot, an ant or an automatic indexer.

A spider is a program run by a search engine to build a summary of a website’s content (content index). Spiders create a text-based summary of content and an address (URL) for each webpage.

Below is an example of how humans perceive a webpage. We can clearly understand what is important, what the title is, where the Call to Action (CTA) is, etc.

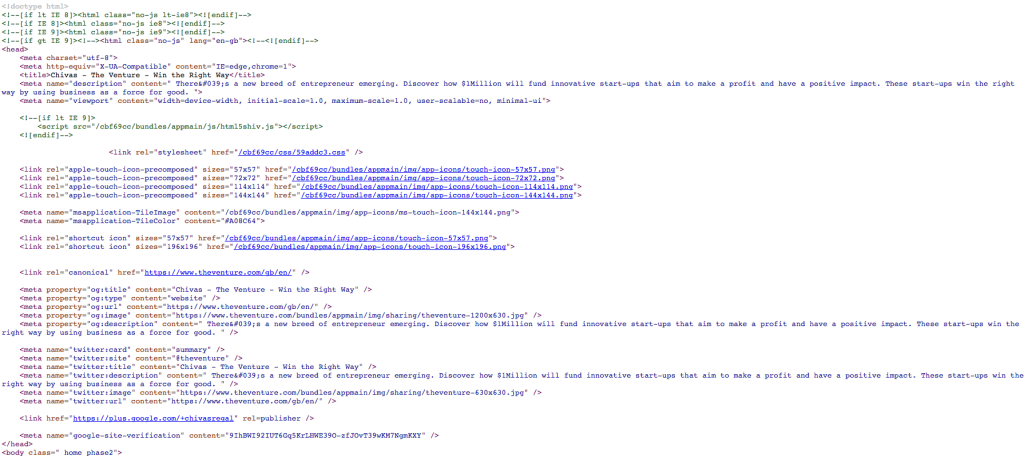

And below is an example of how a spider would “understand” the same website. We need to use the appropriate HTML tags to help spiders understand the content and importance of the page: title tags, semantic tags and, because spiders don’t “read” the content of images, alt attributes for images.

Improper configuration might prevent the pages from being indexed

By showing different content depending on the User-Agent the site might be penalized

Slower sites will be penalised. See more on Why should we care about website performance

This helps the automated “crawlers” understand the content of the images

The Canonical URL is the owner of the content, even if there are multiple URLs that serve the same content. This avoids penalization for content duplication, e.g. <link rel="canonical" href="http://example.com/page-a">. Pagination tags example: <link rel="next" …> and <link rel="prev" …>

e.g., http://www.example.com, https://www.example.com, http://example.com and http://example.com/ should all redirect to only one of them, like https://www.example.com/. This avoids content duplication, which means multiple pages serving the exact same content. An alternative is to make use of the canonical link tag.
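As a minimal sketch of that redirect rule, the function below maps every variant of a URL to a single preferred form. The preferred scheme and host (`https` and `www.example.com`) are assumptions taken from the example above, and `canonical_url` is a hypothetical helper, not part of any framework.

```python
from urllib.parse import urlsplit, urlunsplit

# Assumed preferred scheme and host, taken from the example above.
CANONICAL_SCHEME = "https"
CANONICAL_HOST = "www.example.com"


def canonical_url(url: str) -> str:
    """Return the single preferred URL for any scheme/host variant of the site."""
    parts = urlsplit(url)
    # An empty path and "/" are the same page, so always use "/".
    path = parts.path or "/"
    return urlunsplit((CANONICAL_SCHEME, CANONICAL_HOST, path, parts.query, ""))


def needs_redirect(url: str) -> bool:
    """True when the requested URL is not already the canonical one."""
    return url != canonical_url(url)


print(canonical_url("http://example.com"))              # https://www.example.com/
print(needs_redirect("https://www.example.com/"))       # False
```

A server would answer any request for which `needs_redirect` is true with a 301 to `canonical_url(url)`.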

Use tags like article, section, aside, summary, …

H1 being the most important to H6 the least

This should be relevant to the actual content of the page. Should not be longer than 50-60 characters, as Google truncates the rest in the search results. You should avoid duplicate meta titles

This should be relevant to the actual content of the page. Should not be longer than 150-160 characters, as Google truncates the rest in the search results. You should avoid duplicate meta descriptions
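These length and duplication rules are easy to check automatically. The sketch below assumes the upper bounds mentioned above (60 and 160 characters) and a hypothetical `pages` mapping of URL to `(title, description)`. How you collect that data is up to you.

```python
TITLE_MAX = 60          # upper bound of the 50-60 character guideline
DESCRIPTION_MAX = 160   # upper bound of the 150-160 character guideline


def audit_meta(pages):
    """Return a list of (url, problem) for over-long or duplicated meta tags.

    pages maps each URL to a (title, description) tuple.
    """
    problems = []
    seen_titles = {}
    seen_descriptions = {}
    for url, (title, description) in pages.items():
        if len(title) > TITLE_MAX:
            problems.append((url, "title too long"))
        if len(description) > DESCRIPTION_MAX:
            problems.append((url, "description too long"))
        if title in seen_titles:
            problems.append((url, "duplicate title"))
        else:
            seen_titles[title] = url
        if description in seen_descriptions:
            problems.append((url, "duplicate description"))
        else:
            seen_descriptions[description] = url
    return problems
```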

Whenever a page is moved, we should implement a 301 (permanent) redirection to the new page to keep the same value as it had before (link juice). 404 pages should return the HTTP status 404 and should have useful links back into the site. Broken links are a bad User Experience (UX) and are therefore penalized.
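A small sketch of that status-code logic, independent of any web framework. The `MOVED` and `KNOWN` tables and the `resolve` function are hypothetical names used only for illustration.

```python
# Hypothetical routing tables for illustration only.
MOVED = {"/old-article": "/awesome-article"}   # old path -> new path
KNOWN = {"/", "/awesome-article"}              # pages that currently exist


def resolve(path):
    """Return (status_code, redirect_target) for a requested path."""
    if path in MOVED:
        # Permanent redirect, so the new page inherits the old page's value.
        return 301, MOVED[path]
    if path in KNOWN:
        return 200, None
    # Unknown pages must answer with a real 404 status, not a 200 "not found" page.
    return 404, None
```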

These tags help the spider to understand the content of the website, and allow those companies to better represent the content of the page in a snippet. Google Publisher will eventually add more credibility to the site, by showing your latest posts and profile on the top right side of the search results page

Submitting sitemaps helps the Search Engines to be aware of your latest pages, updates, etc

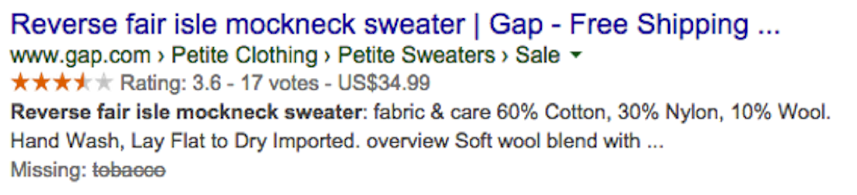

By using this special markup, “spiders” will be able to better understand the content and provide rich snippets on the search results. Available formats can describe a Product, a Person, Comments, Reviews, etc. Also take advantage of Google Now Cards by using schema.org for email (airline ticket, bills, …).
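One common way to add this markup is a JSON-LD script tag using the schema.org `Product`, `Offer` and `AggregateRating` types. The sketch below builds such a tag. The function name and the sample values are assumptions for illustration, and a real page should escape any user-provided text before embedding it.

```python
import json


def product_json_ld(name, price, currency, rating_value, review_count):
    """Build a JSON-LD <script> tag describing a product with price and rating."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "offers": {
            "@type": "Offer",
            "price": price,
            "priceCurrency": currency,
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating_value,
            "reviewCount": review_count,
        },
    }
    return '<script type="application/ld+json">' + json.dumps(data) + "</script>"


# Hypothetical product values
print(product_json_ld("Example Sneaker", "59.99", "GBP", 4.5, 120))
```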

Below an example of a rich snippet with ratings, price, …

Optimize the website for mobile, by creating a specific mobile website when possible. Search results vary if made from a desktop or from a mobile device

They provide very insightful data about how your site is performing. GWT also provides SEO recommendations

Which one is sexier? http://example.com/page.php?p=918726 or http://example.com/awesome-article

The URL string is also searchable, which helps your page to be discoverable.

Avoid making the URLs too deep: if it takes many clicks to get there, the content is perceived as less relevant or important. Also, if we make categories like http://example.com/product/product-name, then http://example.com/product/ should also be available as a page that contains products. URLs are case sensitive
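Readable URLs are usually generated from the page title. Here is a minimal sketch of such a “slug” function. `slugify` is a hypothetical helper, and it lowercases everything so that case sensitivity never produces two URLs for the same page.

```python
import re
import unicodedata


def slugify(title: str) -> str:
    """Turn a page title into a lowercase, hyphen-separated URL segment."""
    # Decompose accented characters and drop anything that is not ASCII.
    ascii_text = (
        unicodedata.normalize("NFKD", title)
        .encode("ascii", "ignore")
        .decode("ascii")
    )
    # Replace every run of non-alphanumeric characters with a single hyphen.
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")


print("http://example.com/" + slugify("Awesome Article!"))
# http://example.com/awesome-article
```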

Below we have an example of URLs being highlighted in search results:

So, after implementing those improvements, it’s time to see if they’re working.

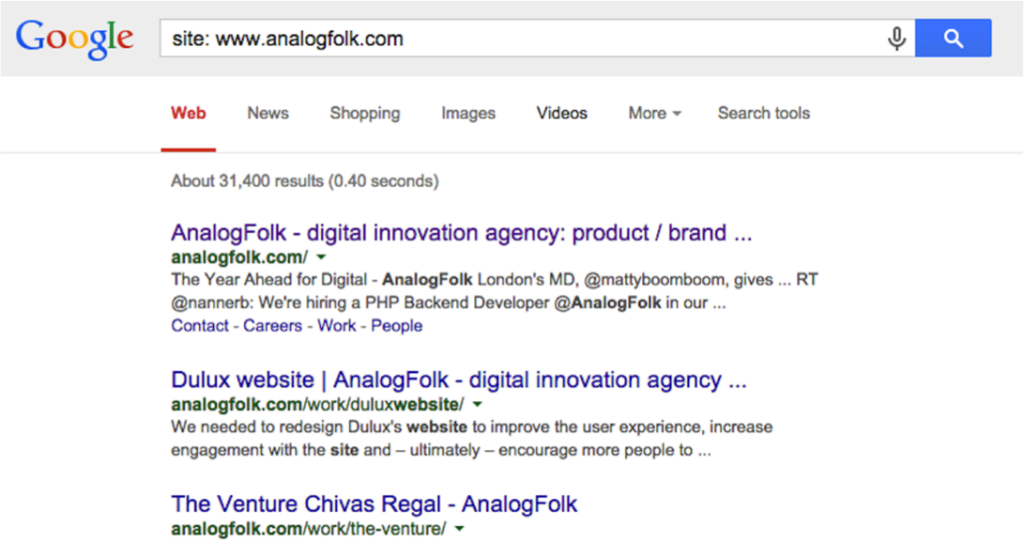

Getting your site indexed is just one of the steps. You can verify that your site is being indexed by searching for “site:www.sitename.com”

Now that we’re sure the site is being indexed, we want to rank higher than our competitors for the selected keywords. About 85% of the users find what they’re looking for in the 1st search results page. We should clearly define which keywords are relevant to our content, e.g. Lucozade as a keyword vs energy drink or feeling down… If a user searches for the brand, they will already know what they want, so we should try to target the related keywords in order to increase discoverability.

The website was being properly indexed, but performing poorly (not showing on the top results).

By implementing a series of non-technical improvements, Shopcade consistently started to rank even higher than the actual brand that also sold the products, consequently improving the click-through rate by 50% – 80%.

Write fresh, well written, unique quality content. One of the most important factors for SEO. Sell through content

Use the blog to keep users engaged and add more content. Use the blog to cross-sell some products / articles. Some blogs are engaging and funny and, as a side effect, they lead users into your website

Users who are informed are more likely to buy. As an example, we have the eBay Buying Guides, that explain what to look for in a certain product. They also use it as an opportunity to cross-sell by suggesting a few products

e.g. simply prepending “35% Off” to the product name in the title of the Shopcade product pages made users click this site instead of others

Keep users engaged by suggesting similar/recent/top products/articles. This means users will spend more time on the site and are more likely to come back. It also allows Search Engines to discover your content more easily. Create lists about your products, or articles like “Which ‘TV SERIES’ character are you?”. Target the intended keywords to drive traffic to your site

Not only does it add unique content and freshness, it also helps other users to make the purchase / keep engaged. Be aware of spam comments and links (you should consider adding “nofollow” to those links). One of the downsides of allowing users to add comments is the risk of being bullied by users, and it might imply an overhead due to moderation

Find out which keywords are trending and create content around them to try to capture a few more users into your website and then convert them. E.g. for the Super Bowl, create an article or a product list targeting the competition, and then try to convert the users

Find out how your users are discovering your website and try to explore it even further. Either by creating content around it or by doing more of what is currently converting better

More specific keywords are easier to rank for because there is less competition, and the more specific the search, the higher the user’s intention. E.g. “Adidas Originals ZX Flux” as opposed to “adidas running shoes”

“Each link back to your site is a vote…”

Share your articles / content in Social Media accounts and allow users to share and spread the word. Consider owning a #hashtag. Create competitions to keep users engaged and go viral

Engage other users / bloggers to write content / reviews about your product (should be natural and not paid)

Authority website links will count more than regular and “spammy” websites

All links should feel natural. Having links to other websites is like voting for those websites. Avoid linking to spammy websites and don’t impose linking back

What have I missed? Feel free to comment.

Even if we optimize all the aspects, we might still have a website that takes longer to load than we’d like. We can make use of several techniques to make the site appear faster

We’ve seen in Website Performance Optimizations how we can make the website load faster. But there are times we can combine these improvements with others that will help to make the site appear to load faster. Even if the website is still loading, the user sees most of the page in the shortest time possible, which translates into a good user experience and the perception that the site is fast.

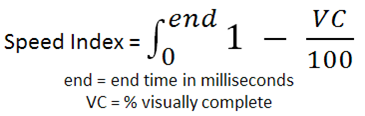

The Speed Index is the average time at which visible parts of the page are displayed. It takes the visual progress of the visible page loading and computes an overall score for how quickly the content was painted. The lower the number, the better.

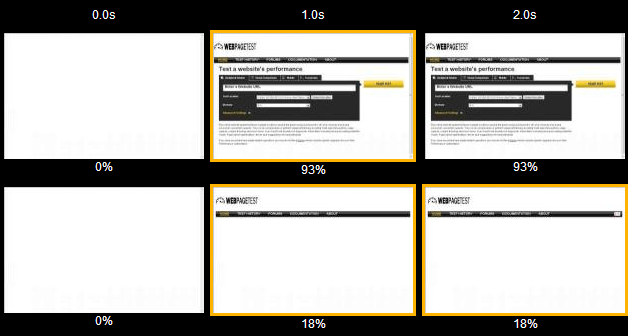

Let’s say the following website takes 6s to load. We can see the difference between a good apparent speed, rendering 93% of the content in 1s and loading the remaining 7% in the following 5s, and a not so good speed index, in which only 18% was loaded after 1s and around 90% only at 5s.

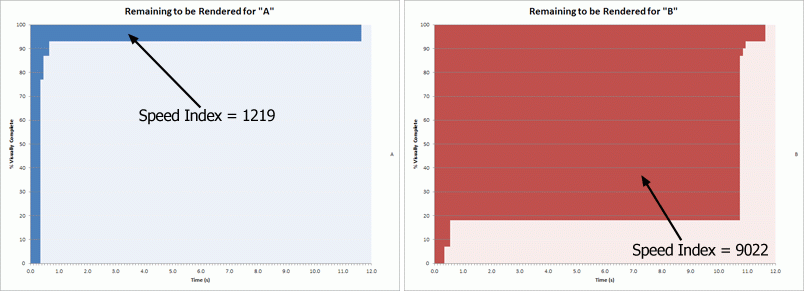

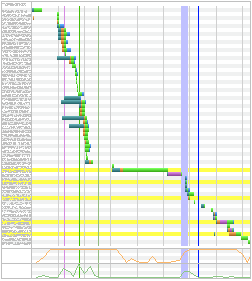

If we plot the completeness of a page over time, we will end up with something that looks like this:

The progress is then converted into a number by calculating the area above the curve, i.e. the part of the page that is not yet rendered, accumulated over time:
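As a rough sketch of that calculation: Speed Index is commonly defined as the area above the visual-completeness curve, which is why a lower number is better. The function below is an illustration under stated assumptions, not the exact method used by any particular tool. It treats completeness as a step function (it stays at the last measured value until the next sample) and takes hypothetical `(time_ms, percent_complete)` samples.

```python
def speed_index(samples, end_ms):
    """Approximate Speed Index from (time_ms, percent_complete) samples.

    Completeness is assumed to stay at the previous value until the next
    sample, and to reach 100% at end_ms.
    """
    area = 0
    prev_t, prev_pct = 0, 0
    for t, pct in samples:
        # Accumulate the part of the page that was still missing in this interval.
        area += (100 - prev_pct) * (t - prev_t)
        prev_t, prev_pct = t, pct
    area += (100 - prev_pct) * (end_ms - prev_t)
    return area / 100


# The two 6-second page loads described earlier:
print(speed_index([(1000, 93)], 6000))              # 1350.0
print(speed_index([(1000, 18), (5000, 90)], 6000))  # 4380.0
```

Both pages finish loading at 6s, but the first one scores far lower (better) because most of it is visible after one second.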

When I worked at shopcade.com, as part of a decision to provide a better user experience and also rank higher in search results, we decided to tackle the page loading time (real and apparent). These improvements led to higher conversion rates, and Search Engines also started indexing more and more of our pages, and more frequently. This eventually brought a lot of organic traffic to the website, along with other specific SEO improvements.

So, by applying the already mentioned performance optimizations (we also tweaked the backend framework, to get a much faster response time – Time to First Byte), we got these results:

The DOM content loaded was triggered just after 0.6s, and we still triggered some scripts asynchronously, after the Content Download trigger. Check the before and after results on Webpagetest.org.

N.B. 1.3 seconds of that time were spent just downloading a web font.

Below we can see an example of a poorly optimized website:

And here a better one:

What have I missed? Feel free to comment.

After you’ve read Why Should We Care about Website Performance?, here is a list of potential optimizations I’ve come up with:

Remove comments, remove unnecessary HTML, JS and CSS.

Combine the JS files and libraries into one JS file and minify. The same for CSS to reduce number of requests.

Loading CSS first prevents additional repaints and reflows, and makes the page look much better from the beginning. Loading JS at the end allows the page to be rendered without being blocked while the scripts load.

This way, the page rendering won’t be blocked and the Document loaded event (e.g., $(document).ready()) is triggered much sooner. All social media plugins and analytics should be loaded asynchronously.

This way we avoid an excessive number of requests. Each request has costs from DNS Lookup, SSL negotiation and content download. If the image is small enough, the cost of DNS and SSL is higher than the cost of the asset itself.

Make an effective use of cache. Enable caching for assets, DB queries, templates / pre-render templates, but also implement Cache Busters to allow invalidating the cache when the assets are updated. One preferred URL fingerprint is /assets/9833e4/css/style.css, as some of the other solutions might have problems with proxies and CDNs (e.g., some CDNs ignore the query string parameters).
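A minimal sketch of how such a fingerprinted URL could be generated. It assumes the fingerprint is a short hash of the file content (the 6-character length mirrors the example path above, and `fingerprinted_path` is a hypothetical helper), so the URL changes only when the asset itself changes.

```python
import hashlib


def fingerprinted_path(asset_path: str, content: bytes, digest_len: int = 6) -> str:
    """Return a cache-busting path such as /assets/<hash>/css/style.css."""
    # Hashing the content means an unchanged file keeps its URL (and its cache).
    digest = hashlib.sha256(content).hexdigest()[:digest_len]
    return f"/assets/{digest}/{asset_path}"


old = fingerprinted_path("css/style.css", b"body { color: black; }")
new = fingerprinted_path("css/style.css", b"body { color: red; }")
print(old != new)  # True: updated content gets a new URL
```

Because the fingerprint is part of the path rather than the query string, it also works with proxies and CDNs that ignore query parameters.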

It may save a lot of time, since cookies are sent with every request and may add as much as 4 KB of overhead per request.

e.g., static.domain.com, static1.domain.com, etc., as browsers usually have a limit on how many concurrent connections they establish with each domain, which means the first set of assets needs to be downloaded before new connections are started.

Google CDN is usually very fast, and physically close to the client. And the client might already have the asset cached.

Enabling compression (e.g. GZIP) makes the files much smaller to download. With jQuery you can get a gain of 88% when compared to the original size: jQuery (273K), Minified (90K), Compressed (32K).
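The 88% figure follows directly from the sizes quoted above, and you can measure the same saving for your own assets. This is a small sketch using Python’s standard `gzip` module. The helper names are hypothetical, and real savings depend on the file.

```python
import gzip


def savings_percent(original_size: float, compressed_size: float) -> float:
    """Percentage of bytes saved relative to the original size."""
    return (1 - compressed_size / original_size) * 100


def gzip_savings(data: bytes) -> float:
    """Compress data with gzip and report the percentage saved."""
    return savings_percent(len(data), len(gzip.compress(data)))


# jQuery figures quoted above: 273K original, 32K minified and compressed
print(round(savings_percent(273, 32)))  # 88
```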

Move them into files to make them cacheable. *Depending on each specific case.

If we only need a 50x50px image, just serve that image. Don’t rely on the browser to resize, as it will still download the full size image.

Remove unnecessary data from images (strip irrelevant information), compress, and if it is a JPEG, make use of the progressive version, as this will make the image start appearing sooner.

Each redirect costs time, so avoid unnecessary redirections.

Nginx is very fast and optimized to serve static content.

If the content is not important for SEO or for another reason, consider triggering the load only after the page is served.

This reduces the load on each server, which translates into faster response times.

Nearly half of the web users expect a site to load in 2 seconds or less, and they tend to abandon a site that isn’t loaded within 3 seconds, according to Akamai and Gomez.com

The performance of websites is critical for the success of businesses. A well-performing website greatly improves the user experience. It’ll keep your audience coming back, staying longer and converting a whole lot better. It is also one of the measured signals for search result rankings, and faster websites usually appear higher than slower ones. Mobile devices have become so significant today that a website should always consider the limitations of those devices.

Disclaimer

Just to mention that these optimizations should be considered on a project per project basis. Some improvements might not be worth considering, depending on budget, project duration, time schedules, available resources, etc.

Typical WordPress themes are crammed full of features. Many will be third-party plugins, styles and widgets the author has added to make the theme more useful or attractive to buyers. Many features will not be used but the files are still present.

A boilerplate may save time but it’s important to understand they are generic templates. The styles and scripts contain features you’ll never use and the HTML can be verbose with deeply-nested elements and long-winded, descriptive class names. Few developers bother to remove redundant code.

Developers are inherently lazy; we write software to make tasks easier. However, we should always be concerned about the consequences of page weight.

Each request takes time to process, as it includes time for DNS Lookup, SSL negotiation and Server Response, and depends on Content Size, Connection Speed, etc. Also, browsers impose a limit on simultaneous connections.

High quality images may look very nice, but we should consider the cost of downloading them on slow connections and/or mobile devices

Websites not optimized for mobile users usually suffer from issues like bloated graphics, non-playable videos and irrelevant cross-linking. Google recently implemented a change to the ranking algorithm that favours mobile-optimized websites in mobile searches.